The AI imperative - A clear need for systematic assessment

Coping with new AI tools such as ChatGPT requires thinking beyond grades

The Student360.Report focuses on the operational challenges of orienting higher education institutions toward systems and processes that support student success. The companion Ex4Edu.Report focuses on the broader issues and challenges facing higher education. The two reports are published on alternating dates.

In the most recent issue of the Ex4Edu.Report, we began discussing how technologies such as ChatGPT and the growing array of AI tools will change higher education. This issue of the Report focuses on adapting assessment strategies and processes in light of ChatGPT and other AI tools.

More than tests and grades

A few years ago, I made a presentation to an economic development agency in Berlin about locating a data analytics venture in the region to support higher education institutions. The meeting was fairly typical until the end, when the proposal was dismissed as "just tests" and not worthy of further consideration or funding.

The reaction of these highly trained development officials, unfortunately, reflects a prevalent but mistaken view. A good friend, Ken Gergen of Swarthmore College, and I have had several similar discussions about his book, written with Scherto Gill, "Beyond the Tyranny of Testing: Relational Evaluation in Education."

What Ken and Scherto clearly identify is that educational assessment has been captured by a grades-and-testing mentality, or, as Ken has put it, "the testing enterprise." Breaking out of the testing-and-grades box requires moving beyond the assessment instruments themselves (such as tests and grades) to thinking about what we can accomplish, and hope to accomplish, in education.

When I read articles predicting a systemic collapse in academia due to ChatGPT or any other AI technology, the authors are usually stuck in the mud of treating assessment instruments as the whole of assessment rather than taking a broader view. It is almost like a farmer thinking that buying the latest tractor (i.e., technology) will by itself increase crop yield. Yes, it might help, but it is not enough.

Dimensions of effective assessment

The first source of confusion in assessment is neglecting the level of focus. There are significant differences among evaluating the success of a course, an academic program, and an academic department or institution. Effective assessment plays an important but different role at each level.

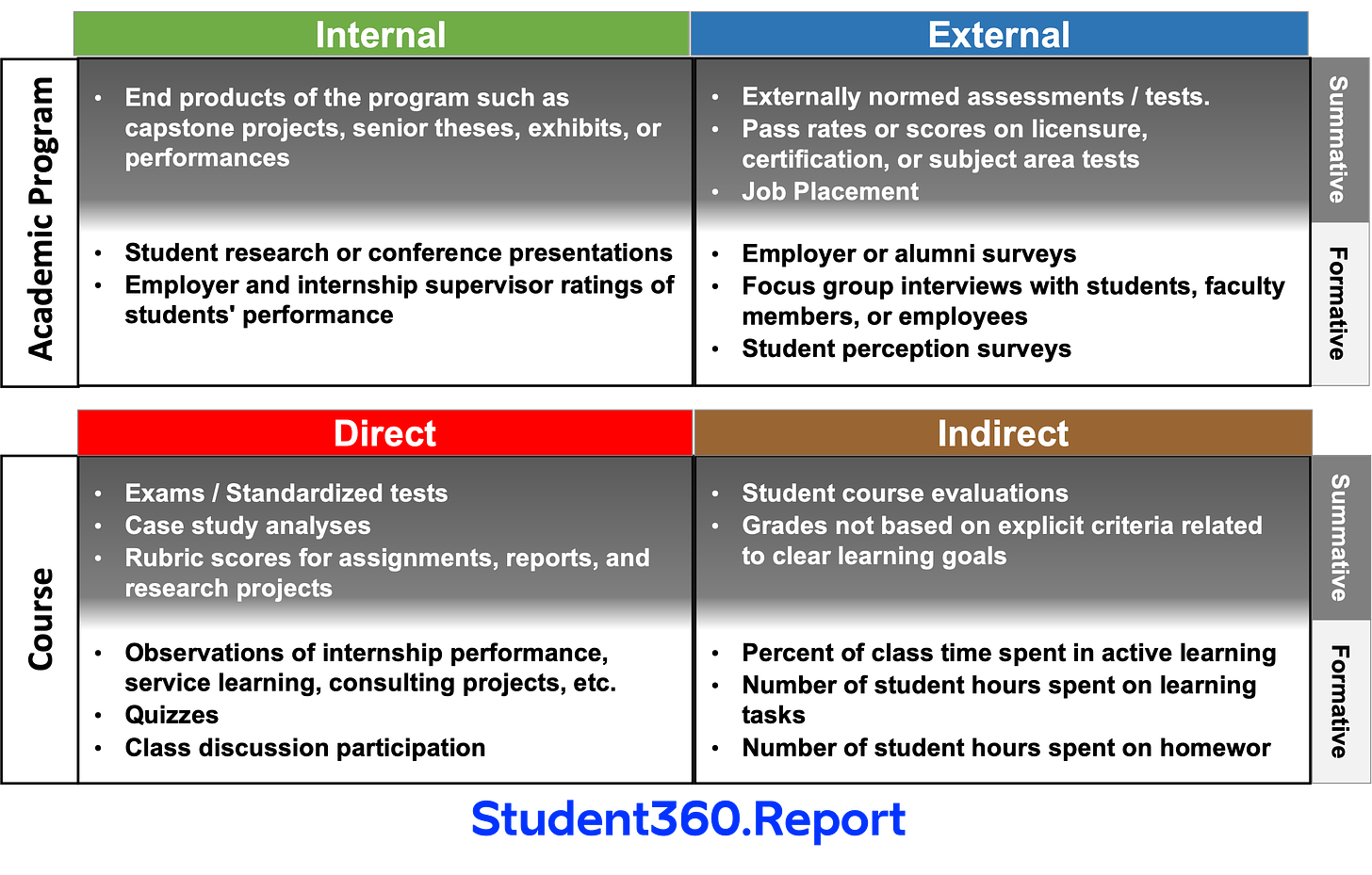

One tool that I have used to assist clients in understanding the difference is shown in the diagram below.

The diagram bundles several concepts into a single presentation. First, determining the effectiveness of a program differs from determining the effectiveness of a course. An academic program should be "assessed" based on the overall success of its parts, which are its courses. Courses, by contrast, are more granular, which directs the assessment focus to what happens as a course is taught.

Second, there is a distinction between summative and formative elements. Summative elements are what the name suggests: the summation of efforts. Formative elements are checkpoints along the way to achieving the final result.

Last, the diagram distinguishes internal from external assessment at the program level and direct from indirect assessment at the course level. The internal vs. external dimension is used at the program level because it reflects how stakeholders view the success of a program in preparing students to function in the world. At the more granular course level, a direct vs. indirect distinction is drawn instead. This distinction helps in deciding how to evaluate the outcomes of various learning activities. Tests appear at this level as a form of direct assessment, but only as one of many potential measures.

The internal vs. external and direct vs. indirect distinctions cannot capture the complexity of success at the institutional level.

There is a higher, or "institutional," level, which is not shown in this diagram. When the discussion turns to the success of an educational institution, department, or organization, the way of evaluating success also changes. Instead of assessment, the organizational level is more suitably evaluated through what is termed institutional effectiveness. The effectiveness of an institution often reflects many non-academic factors, such as facilities, funding, and the nature of the population served. Although these factors certainly affect student success, the mixture of academic and non-academic areas requires a different approach. A future Student360.Report post will tackle institutional-level issues.

Moving beyond impoverished assessment

The present consternation over AI tools and their potential to disrupt higher education appears overblown. Today's suite of tools consists primarily of large language models, which rely on available content to assemble what is essentially a web query on steroids. As explained by Peter Ziehen, these tools are programming- and programmer-intensive. Replacing the entirety of human learning capability is still years, or even decades, away at the earliest.

The infancy of AI models does not mean, however, that weak assessment practices are without risk.

Tools such as ChatGPT can pose a risk when an institution engages in what I term “impoverished assessment.” The hallmarks of such poor assessment are:

overreliance on a single type of assessment instrument, e.g., papers or simplistic multiple-choice tests,

neglecting the value of observational tools and methods common in indirect and external types of assessment, and

failure to consider the full dimensions of learning, such as experiential learning.

Moving beyond impoverished assessment requires thinking differently about the roles of the learner and teacher in an educational setting. It also requires recognition of the relational nature of the learning experience.

Embracing the multiple dimensions of the student

The shift to a balanced view of assessment starts with treating capability as the central focal point. In short, what impact will a learner or student have after completing a program? Further, how will the collective impact of learners or students play out in society?

A full view of a student's role in education recognizes that the student is at once the recipient of instruction (the customer), the key actor (the means of production), and the beneficiary (the outcome).

Framing capabilities in terms of the multiple roles of the student should be the starting design point for an assessment strategy. Key questions that should be addressed include:

How and how well are students learning and experiencing the learning journey?

Are students adequately equipped to learn successfully?

Can the institution demonstrate that students have mastered the knowledge and skills, and that they have the abilities and attitudes to use them in their lives?

Assessment by design - The imperative

The "jobs to be done" in developing effective and helpful assessment processes and instruments begin with a thoughtful design effort. Future issues of the Student360.Report will discuss how assessment by design can be structured.